writings

the web in the age of ai

Intent surfaces, concurrent React, and MCP-powered agents reshaping the front-end.

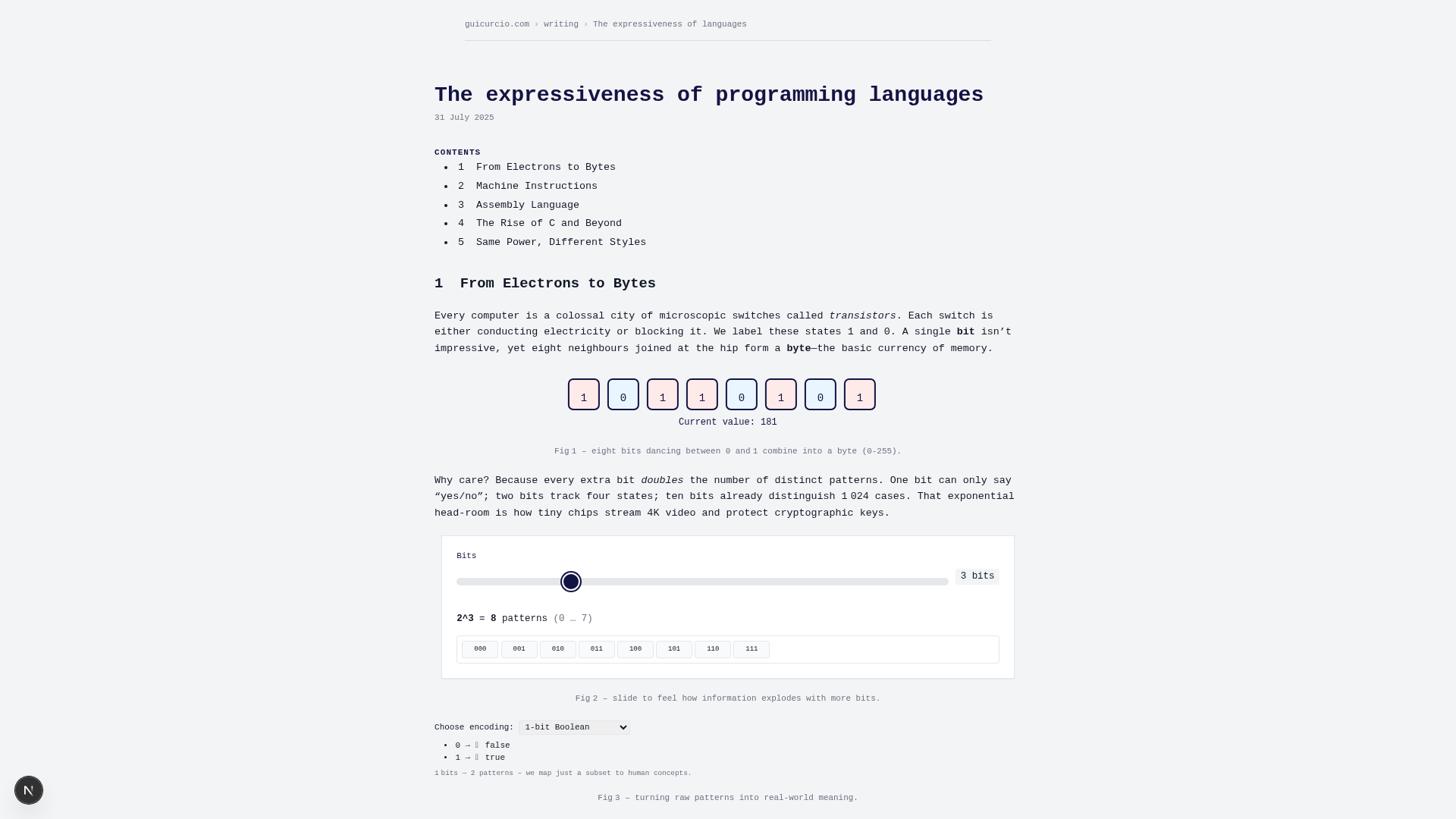

the expressiveness of programming languages

Gwern & Rickard case studies on accidental Turing completeness and AI-friendly language design.

schemas & formalizations

Versioning GraphQL schemas, Hasura metadata, and SpecBundles so agents stay auditable.